From Sci-Fi to Reality: Exploring the Evolution of Artificial Intelligence

Artificial intelligence (AI) has long been a subject of fascination, growing from the imaginings of science fiction into real-world applications that permeate our daily lives. This article traces the history of AI, from its conceptual beginnings to the powerful tools we use today.

Along the way, we’ll explore the milestones, innovations, and setbacks that have shaped AI’s evolution.

In the Realm of Science Fiction

In the first half of the 20th century, science fiction familiarized the public with the idea of artificially intelligent robots.

Familiar characters like the “heartless” Tin Man from The Wizard of Oz and the humanoid robot that impersonated Maria in Metropolis captured the public’s imagination.

By the 1950s, a generation of scientists, mathematicians, and philosophers had become enamored with the idea of AI and began to explore its mathematical possibilities.

Alan Turing: The Birth of AI Theory

One such individual was Alan Turing, a young British polymath who pondered whether machines could think like humans. Turing proposed that humans use available information and reason to solve problems and make decisions, so why couldn’t machines do the same?

His 1950 paper, “Computing Machinery and Intelligence,” laid the groundwork for building intelligent machines and for testing their intelligence, proposing the “imitation game” now known as the Turing test.

Overcoming Early Hurdles

Several obstacles stood in the way of turning Turing’s ideas into working machines. First, until the late 1940s computers lacked a key prerequisite for intelligence: they could execute commands, but they couldn’t store them. In other words, computers could be told what to do but couldn’t remember what they had done.

Second, computing was extremely expensive. In the early 1950s, leasing a computer could cost up to $200,000 a month. This made AI research a luxury reserved for prestigious universities and big technology companies. To secure funding, researchers needed a proof of concept and the backing of high-profile advocates.

The Conference that Launched AI

In 1956, John McCarthy and Marvin Minsky hosted the groundbreaking Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI). This historic conference gathered top researchers from various fields for an open-ended discussion of artificial intelligence, a term McCarthy had coined in the 1955 proposal for the event.

Although the conference failed to establish standard methods for the field, it united attendees in the belief that AI was achievable and sparked the next two decades of AI research.

The AI Roller Coaster: Successes and Setbacks

From 1957 to 1974, AI flourished as computers became faster, cheaper, and more accessible. Machine learning algorithms improved, and researchers gained a better understanding of which algorithm to apply to specific problems.

Early demonstrations such as Newell and Simon’s General Problem Solver and Joseph Weizenbaum’s ELIZA showed promise in problem solving and natural language interpretation, respectively. These successes, along with the advocacy of leading researchers, convinced government agencies such as the Defense Advanced Research Projects Agency (DARPA, then known as ARPA) to fund AI research at several institutions.

Optimism was high. Marvin Minsky famously told Life magazine in 1970 that “from three to eight years we will have a machine with the general intelligence of an average human being.” This enthusiasm soon waned, however, as researchers ran into a mountain of obstacles. Chief among them was the lack of computational power: computers simply could not store enough information or process it fast enough. As patience dwindled, so did funding, leading to a roughly decade-long slump in AI research.

AI’s Resurgence in the 1980s

In the 1980s, AI saw a resurgence thanks to an expanded algorithmic toolkit and a boost in funding. John Hopfield and David Rumelhart popularized neural-network techniques, forerunners of what is now called deep learning, that allowed computers to learn from experience.

Edward Feigenbaum pioneered expert systems, which mimicked the decision-making process of human experts. The Japanese government invested heavily in expert systems and other AI projects through its Fifth Generation Computer Project (FGCP), spending $400 million between 1982 and 1990. Although the project’s ambitious goals were not fully realized, it arguably inspired a new generation of engineers and scientists.

The Quiet Progress of AI in the 1990s and 2000s

Ironically, even as public interest waned and government funding dried up, AI thrived. During the 1990s and 2000s, many landmark goals in artificial intelligence were achieved. In 1997, IBM’s Deep Blue defeated reigning world chess champion Garry Kasparov, the first time a reigning world champion lost a match to a computer under standard tournament conditions.

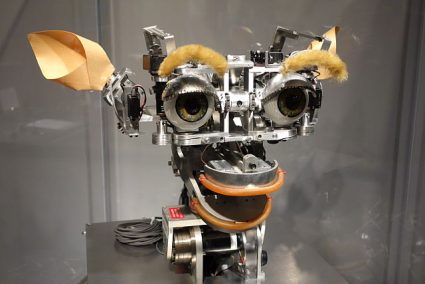

That same year, Dragon Systems released speech recognition software for Windows, another step forward in spoken language interpretation. Even human emotion became a target: Kismet, a robot developed by Cynthia Breazeal at MIT, could recognize and display emotions.

The Role of Time and Technological Advancements

One factor behind these breakthroughs was the rapid improvement in computer storage and processing power. Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, had finally caught up with AI’s needs, enabling machines like Deep Blue and, later, Google DeepMind’s AlphaGo to achieve unprecedented feats.

This progress highlights the cyclical nature of AI research: researchers push AI to the limits of the computational power currently available, then wait for Moore’s Law to catch up once again.

The Age of Big Data and AI’s Ubiquity

Today, we live in an era of big data, where vast amounts of information can be collected and processed. AI has already demonstrated its value in industries such as technology, banking, marketing, and entertainment. Even without significant algorithmic advancements, big data and massive computing power enable artificial intelligence to learn through brute force.

Although Moore’s Law shows signs of slowing, the growth of data continues to accelerate. Future breakthroughs in computer science, mathematics, or neuroscience could help AI overcome the limits of Moore’s Law and drive its next stage of evolution.

A Glimpse into the Future

As AI continues to advance, we can expect language processing to take center stage. Conversations with expert systems may become increasingly fluid, and real-time translation between languages could become commonplace. Driverless cars are likely to hit the roads within the next two decades, further showcasing AI’s impact on daily life.

In the long term, the ultimate goal is to achieve general intelligence, where machines surpass human cognitive abilities in all tasks. Although this may seem like a distant dream, it raises important ethical questions that must be addressed. As AI continues to evolve, society will need to engage in serious discussions about machine policy, ethics, and the implications of increasingly intelligent machines. For now, we can marvel at AI’s progress and watch as it continues to shape our world.
