The Dark Side of Data Engineering: 19 Things To Hate

Let’s explore the various aspects of data engineering that can be unappealing as well as some strategies for overcoming these issues.

Data architects design data-driven solutions that can be implemented practically by any team. Learn how to become a data architect.

Some people have a calling in life. For everyone else, there’s a career in data engineering. Learn about this data science field in our guide.

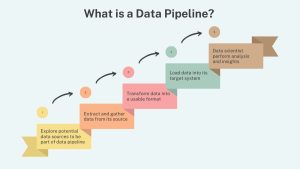

In data engineering, data pipelines do the legwork of collecting and processing data without human intervention.

What is data engineering? This article introduces the what, why, and how of data engineering, including its tools and techniques.

Extract, transform, and load (ETL) is an essential data engineering process that reshapes data to conform to business requirements.

For anyone interested in data science, it’s important to understand the data engineer role and its responsibilities, skills and qualifications.

We explore the data engineering tools that data engineers use to design infrastructure, build data pipelines, and perform analytics.

This guide provides an overview of the skills associated with data science roles: data scientist, data engineer, and data analyst.